Deep Learning based reach-and-grasp EEG decoder

EEG-based neurotechnology for motor rehabilitation © Bitbrain

Decoding three different executed reach-and-grasp actions from their electroencephalogram (EEG) recordings from different electrodes is of crucial significance for the rehabilitation of hand function in patients with motor disorders [1]. Despite the many degrees of freedom of human hand movements, most actions of daily life can be executed using only a few grasp types, such as the palmar and lateral grasps. Recent studies have already shown that neural correlates of natural reach-and-grasp actions can be identified in the EEG [1][2].

Deep learning has recently achieved promising results in computer vision and biomedical engineering. This work therefore studies the possibility of developing deep learning based decoders to classify grasp actions from EEG signals. We have also studied the possibility of developing inter-subject classifiers and of transfer learning between the different recording technologies. For this purpose, different neural network architectures were tested, single-trial and cropped-trial performance were compared, and two training techniques were evaluated: within-subject and inter-subject training.

1-EEG Introduction

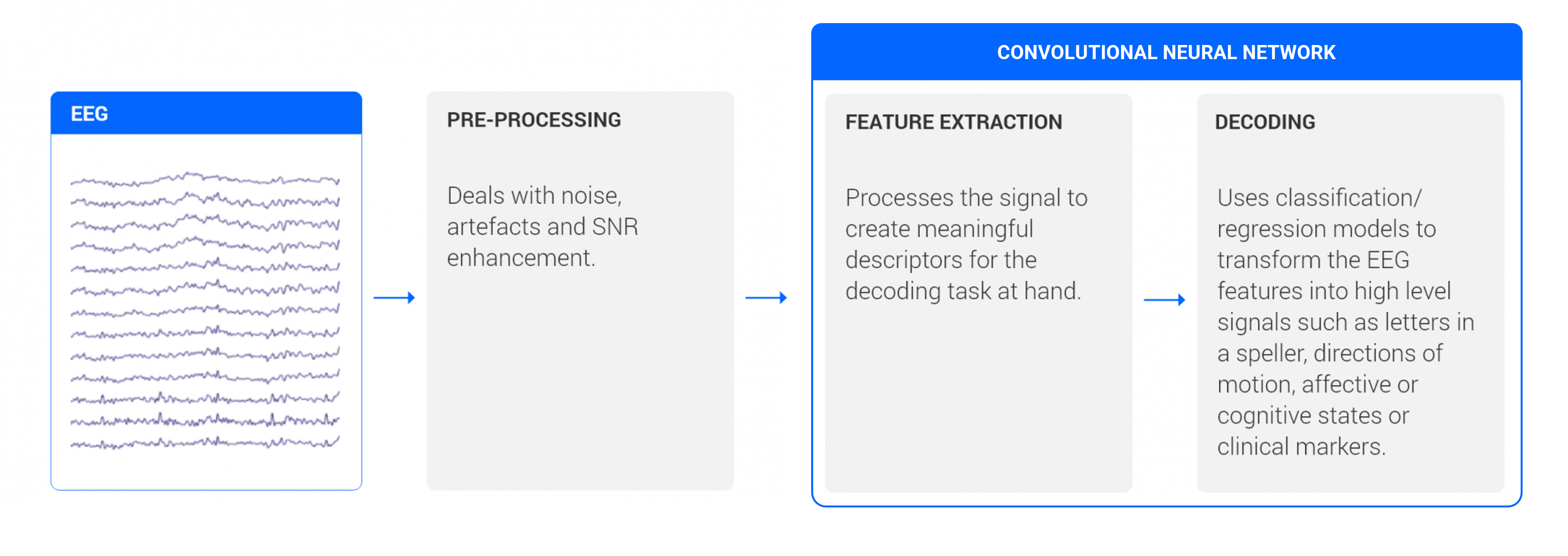

EEG is a cost-effective, non-invasive technique for examining brain activity linked to the multiple neurocognitive processes that underlie human behavior. It consists of placing electrodes on the head to monitor the electrical activity produced when neurons fire. The EEG records and measures electrical signals of the human brain from multiple cortical areas. EEG monitoring therefore makes it possible to quantify different types of brain waves, also known as neural oscillations. The standard pipeline followed to extract information is depicted in the next figure:

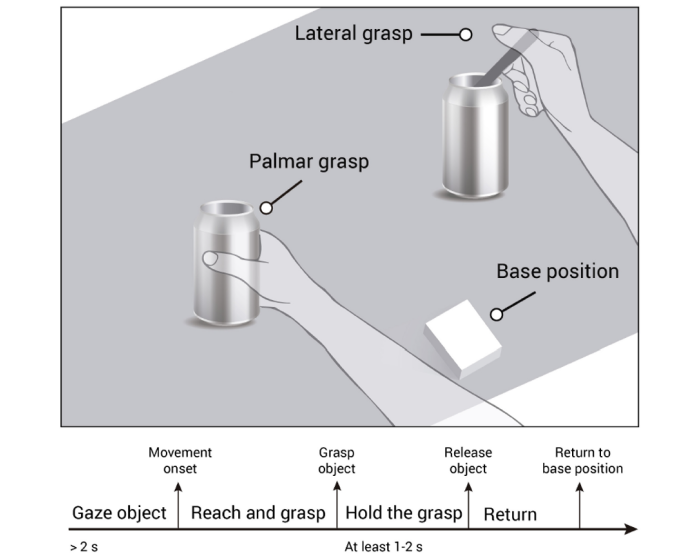

2-Dataset

In a cue-guided experiment, 15 healthy individuals per recording system (45 right-handed participants in total) were asked to perform reach-and-grasp actions using daily life objects. The dataset is publicly available at BNCI Horizon 2020. The pre-recorded dataset contains 4 runs of 7 min each, with 20 trials per run for each reach-and-grasp condition, leading to 80 trials per condition (TPC), plus a no-movement condition. The participants performed two self-initiated reach-and-grasp movement conditions (palmar and lateral grasp). EEG was recorded with three systems:

- Gel-based electrodes recordings. EEG was measured with 58 electrodes (frontal, central and parietal areas).

- Water-based electrodes recordings measured using the mobile, water-based EEG-Versatile™ system. EEG was measured with 32 electrodes.

- Dry-electrodes recordings measured using the dry-electrodes EEG-Hero™ headset. EEG was measured with 11 electrodes over the sensorimotor cortex.

Data Preprocessing

The EEG data processing was analogous to that in [2]. The data from all modalities were filtered with a zero-phase 4th-order Butterworth filter with a cut-off frequency of 0.3 Hz and resampled to 128 Hz. We defined a window of interest of [-2, 3] s for each movement trial, relative to the movement onset at second 0. In addition, we extracted 81 rest trials, each 5 seconds long, from inactivity periods.
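The window-of-interest step can be illustrated with a minimal sketch. It assumes the continuous recording is already filtered and resampled to 128 Hz and is stored as a NumPy array of shape (channels, samples). The function name `extract_epochs` and the `onsets_s` input are hypothetical, not taken from the original code.

```python
import numpy as np

FS = 128               # sampling rate after resampling (Hz), from the text
WINDOW = (-2.0, 3.0)   # window of interest relative to movement onset (s)


def extract_epochs(eeg, onsets_s, fs=FS, window=WINDOW):
    """Cut fixed-length trials around each movement onset.

    eeg      : array (n_channels, n_samples), already filtered and resampled
    onsets_s : movement onset times in seconds (hypothetical input)
    returns  : array (n_trials, n_channels, n_window_samples)
    """
    start_off = int(round(window[0] * fs))
    stop_off = int(round(window[1] * fs))
    epochs = []
    for onset in onsets_s:
        center = int(round(onset * fs))
        start, stop = center + start_off, center + stop_off
        # Skip trials whose window would fall outside the recording.
        if start < 0 or stop > eeg.shape[1]:
            continue
        epochs.append(eeg[:, start:stop])
    if not epochs:
        return np.empty((0, eeg.shape[0], stop_off - start_off))
    return np.stack(epochs)


# Example: 60 s of 11-channel data; the last onset is too close to the end.
eeg = np.zeros((11, 60 * FS))
trials = extract_epochs(eeg, [5.0, 20.0, 59.0])
```

With a 5 s window at 128 Hz, each trial holds 640 samples per channel, which is also the length of the 5 s rest trials.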

3-Methods

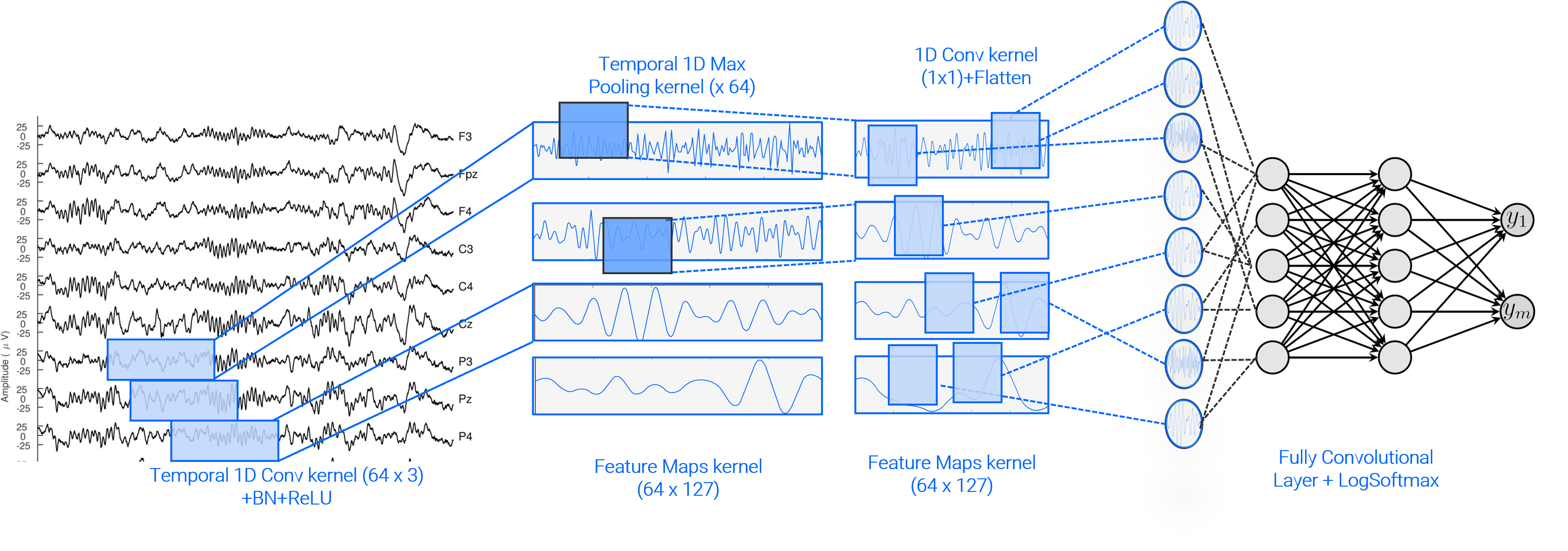

Vanilla 1D Network

We aimed to design a single convolutional neural network (CNN) architecture that accurately classifies grasp actions from different EEG decoding modalities while being as compact and simple as possible. We tried a simple vanilla network based on 1D temporal convolutions in order to encapsulate the EEG feature extraction methodologies used in traditional classifiers [2].

EEGNet [3]

EEGNet is a compact CNN designed for BCIs that can be trained with very limited data. The architecture has three convolution layers:

- a one-dimensional convolution analogous to temporal band-pass filtering

- a depthwise convolution to perform spatial filtering,

- a separable convolution to identify temporal patterns across the previous filters
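The three stages listed above can be sketched in PyTorch. This is an illustrative approximation, not the reference implementation from [3]: the class name `EEGNetSketch`, the default hyperparameters and the choice of PyTorch are assumptions, and the max-norm weight constraints of the original model are left out.

```python
import torch
from torch import nn


class EEGNetSketch(nn.Module):
    """EEGNet-style model; input shape is (batch, 1, n_channels, n_samples)."""

    def __init__(self, n_channels=11, n_samples=128, n_classes=3,
                 f1=8, depth=2, f2=16, kernel_length=64, dropout=0.5):
        super().__init__()
        # 1) Temporal convolution, analogous to band-pass filtering.
        self.temporal = nn.Sequential(
            nn.Conv2d(1, f1, (1, kernel_length),
                      padding=(0, kernel_length // 2), bias=False),
            nn.BatchNorm2d(f1),
        )
        # 2) Depthwise convolution across electrodes (spatial filtering).
        self.spatial = nn.Sequential(
            nn.Conv2d(f1, f1 * depth, (n_channels, 1), groups=f1, bias=False),
            nn.BatchNorm2d(f1 * depth),
            nn.ELU(),
            nn.AvgPool2d((1, 4)),
            nn.Dropout(dropout),
        )
        # 3) Separable convolution = depthwise temporal + pointwise mixing.
        self.separable = nn.Sequential(
            nn.Conv2d(f1 * depth, f1 * depth, (1, 16), padding=(0, 8),
                      groups=f1 * depth, bias=False),
            nn.Conv2d(f1 * depth, f2, 1, bias=False),
            nn.BatchNorm2d(f2),
            nn.ELU(),
            nn.AvgPool2d((1, 8)),
            nn.Dropout(dropout),
        )
        # Track the time dimension through the convolutions and poolings.
        width = n_samples + 2 * (kernel_length // 2) - kernel_length + 1
        width = width // 4
        width = width + 2 * 8 - 16 + 1
        width = width // 8
        self.classifier = nn.Linear(f2 * width, n_classes)

    def forward(self, x):
        x = self.separable(self.spatial(self.temporal(x)))
        return self.classifier(x.flatten(start_dim=1))


model = EEGNetSketch(n_channels=11, n_samples=128, n_classes=3)
model.eval()
with torch.no_grad():
    logits = model(torch.zeros(4, 1, 11, 128))  # 4 one-second dry-EEG crops
```

The defaults here (11 channels, 128 samples, 3 classes) mirror the dry-electrode setup, 1 s crops at 128 Hz, and the palmar / lateral / rest classes described in this document.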

HTNet architecture [4]

HTNet builds upon EEGNet [3]. The authors added a Hilbert transform layer after the initial temporal convolution to compute relevant spectral power features, in a data-driven version of the filter-Hilbert method. The temporal convolution and Hilbert transform layers generate data-driven spectral features that can then be projected from electrodes onto common regions of interest using a predefined weight matrix.
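For reference, the standard filter-Hilbert computation can be written as follows. This is the textbook formulation, not copied from the HTNet code, and the symbol $x_f(t)$ for the output of one temporal filter is an assumption made for illustration.

$$
z_f(t) = x_f(t) + i\,\mathcal{H}[x_f](t), \qquad
\mathcal{H}[x](t) = \frac{1}{\pi}\,\mathrm{p.v.}\!\int_{-\infty}^{\infty} \frac{x(\tau)}{t-\tau}\,d\tau
$$

$$
P_f(t) = |z_f(t)|^2 = x_f(t)^2 + \big(\mathcal{H}[x_f](t)\big)^2
$$

Here $z_f(t)$ is the analytic signal and $P_f(t)$ is the instantaneous power in the band selected by the filter. In HTNet the filters are learned temporal convolution kernels rather than fixed band-pass filters.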

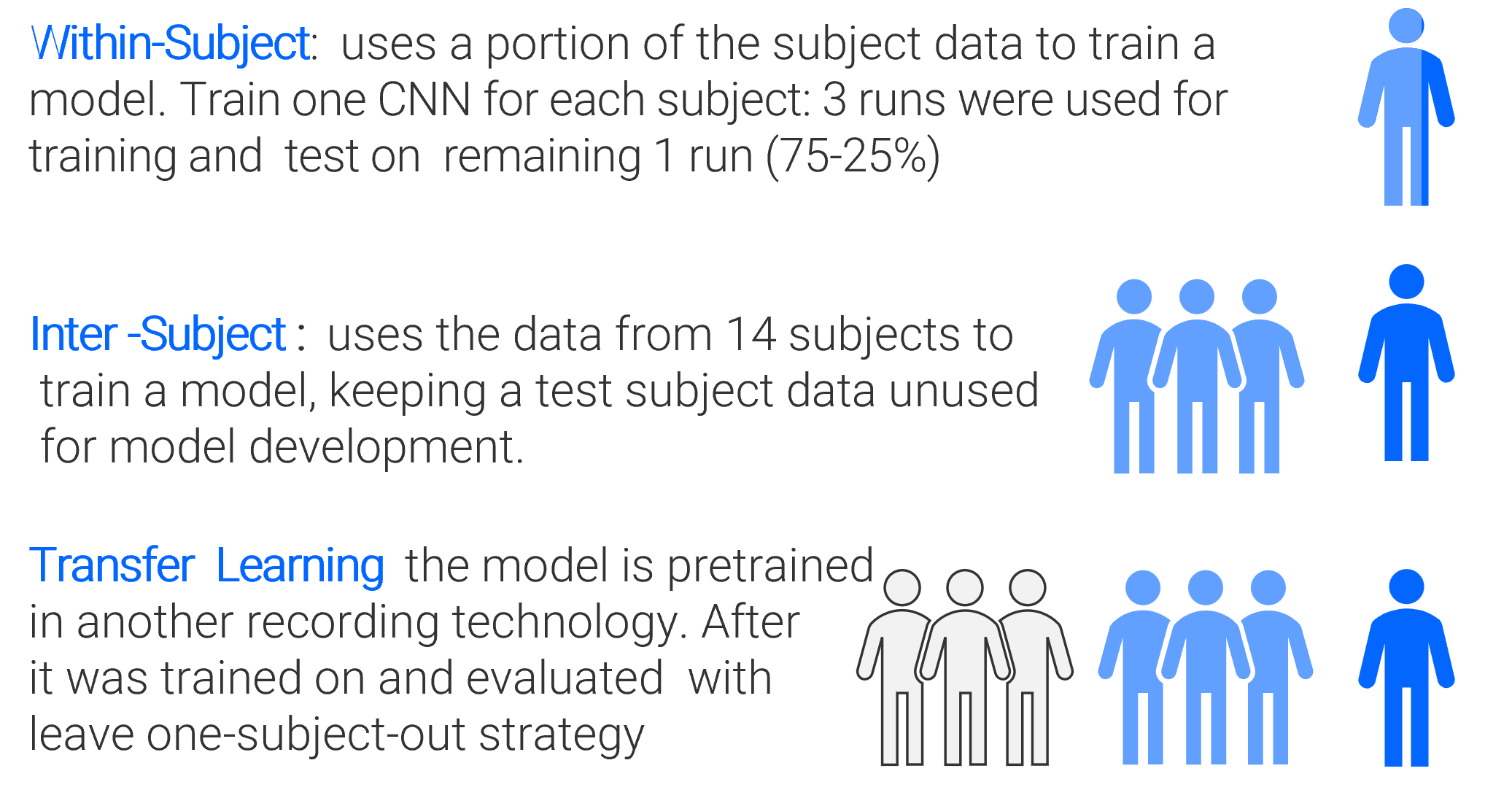

Training Strategies

Transfer learning techniques from machine learning have also been adopted in EEG decoding to cope with inter-subject variability in the feature distribution. A common cross-validation strategy in EEG decoding is known as "leave-one-subject-out": given N subjects, the training subset is formed by N - 1 subjects, while the remaining subject is used for testing. Classification results are reported for different training strategies: within-subject, inter-subject and with pretraining on another recording technology.
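The leave-one-subject-out split can be sketched in a few lines. The function name and the use of integer subject identifiers are assumptions made for illustration.

```python
def leave_one_subject_out(subject_ids):
    """Yield (train_subjects, test_subject) pairs, one fold per subject."""
    subjects = sorted(set(subject_ids))
    for test_subject in subjects:
        train_subjects = [s for s in subjects if s != test_subject]
        yield train_subjects, test_subject


# Example with 15 subjects: 15 folds, each training on the other 14.
folds = list(leave_one_subject_out(range(1, 16)))
```

Each fold trains an inter-subject model on N - 1 participants and evaluates it on the participant it has never seen.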

Data Augmentation

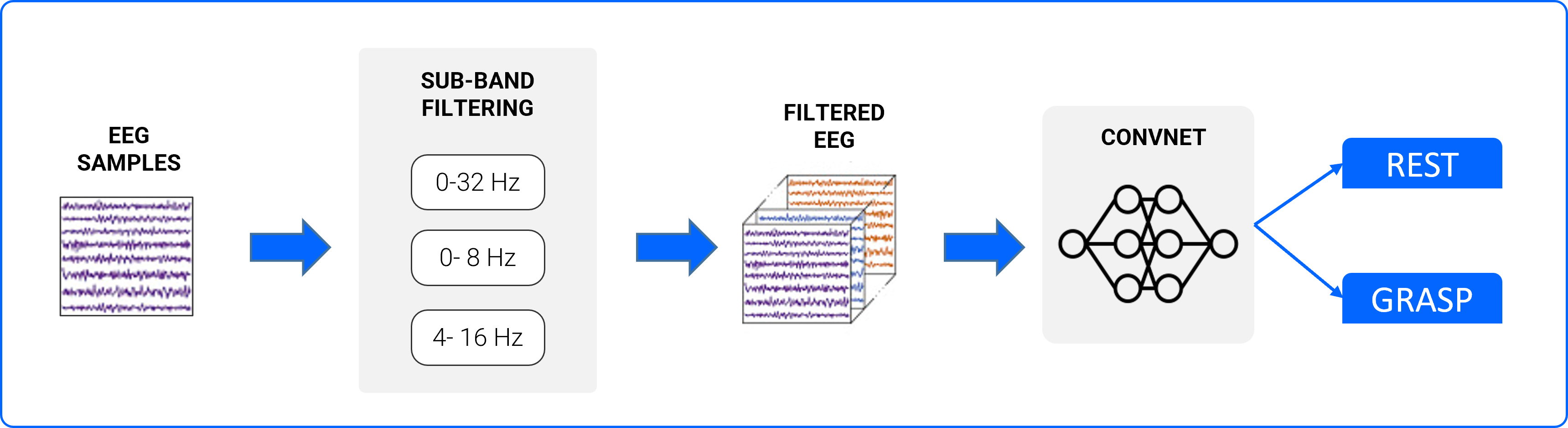

Data augmentation refers to techniques that increase the amount of data by slightly modifying the training data. It is especially useful for EEG signals, where small datasets strongly limit classifier performance. Still, because of the variability and time-series nature of EEG, it is challenging to augment the data in the feature space. Based on the findings of [2], we implemented a simple on-the-fly data augmentation that consists of band filtering the training data. The aim is to encourage the network to learn different features at different frequency bands.
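A minimal sketch of such an on-the-fly augmentation is given below. The frequency bands, the probability `p` and the FFT mask are all assumptions: the band edges used in the study are not listed here, and the FFT mask is a simple stand-in for a proper band-pass filter.

```python
import numpy as np

FS = 128  # sampling rate (Hz), from the preprocessing step

# Hypothetical frequency bands (Hz); the bands used in the study are not given.
BANDS = [(0.3, 3.0), (3.0, 8.0), (8.0, 13.0), (13.0, 30.0)]


def band_limit(trial, low, high, fs=FS):
    """Keep only the [low, high] Hz content of a (n_channels, n_samples) trial."""
    spectrum = np.fft.rfft(trial, axis=-1)
    freqs = np.fft.rfftfreq(trial.shape[-1], d=1.0 / fs)
    mask = (freqs >= low) & (freqs <= high)
    return np.fft.irfft(spectrum * mask, n=trial.shape[-1], axis=-1)


def augment(trial, rng, bands=BANDS, p=0.5):
    """With probability p return a band-limited copy, otherwise the trial itself."""
    if rng.random() >= p:
        return trial
    low, high = bands[rng.integers(len(bands))]
    return band_limit(trial, low, high)


# Example: one 11-channel, 1 s trial drawn at random.
rng = np.random.default_rng(0)
trial = rng.standard_normal((11, FS))
augmented = augment(trial, rng)
```

Calling `augment` inside the training data loader gives the network a different band-limited view of a trial on each epoch, without storing extra copies of the data.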

4-Results

In a single-trial, multiclass decoding approach that incorporated both movement conditions and rest, the reach-and-grasp actions can be successfully decoded using deep learning based decoders.

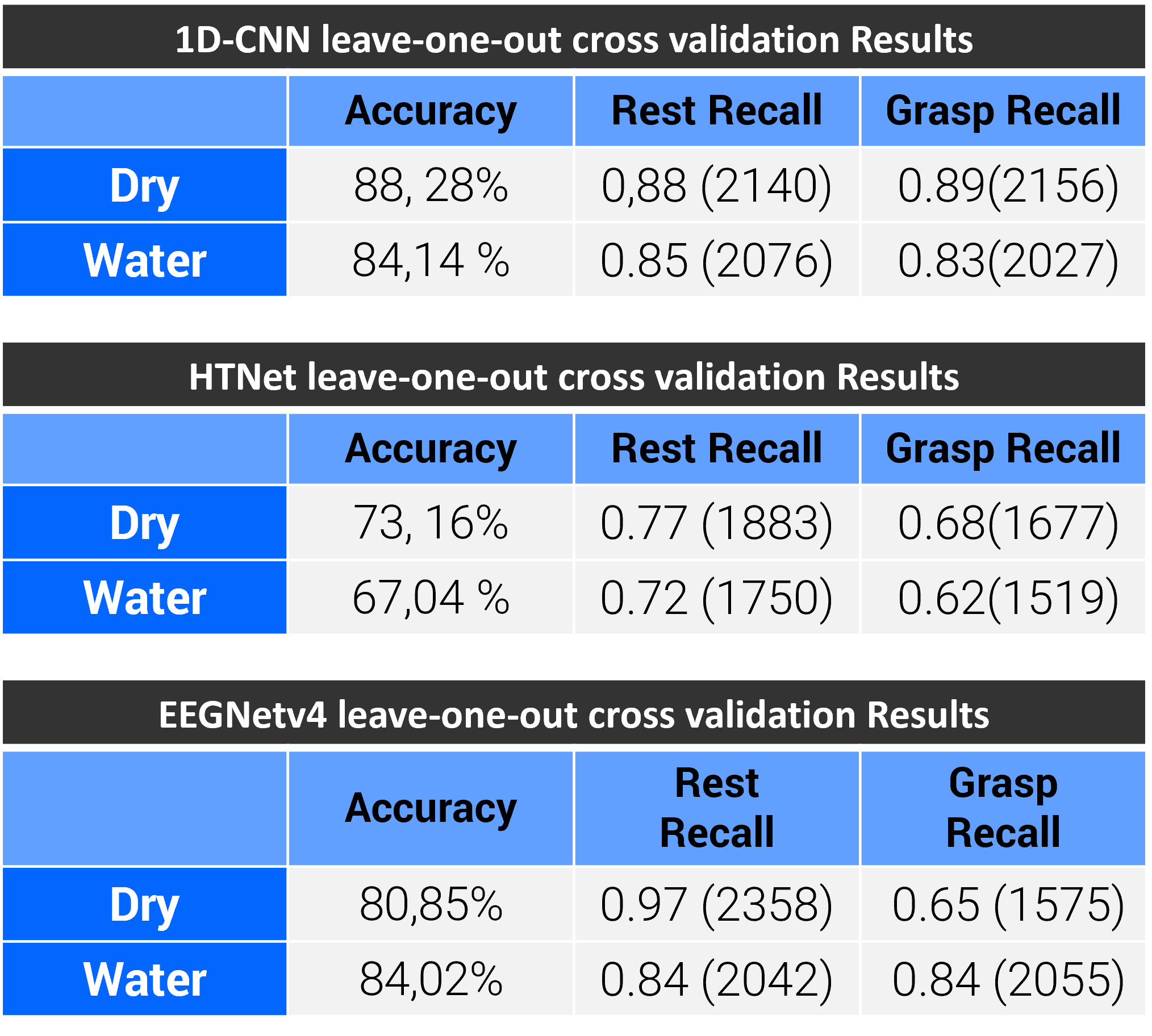

We compared the decoding accuracy on single trials of 2 seconds across the network architectures that were state of the art at the time of the study. The table below shows the inter-participant classification results.

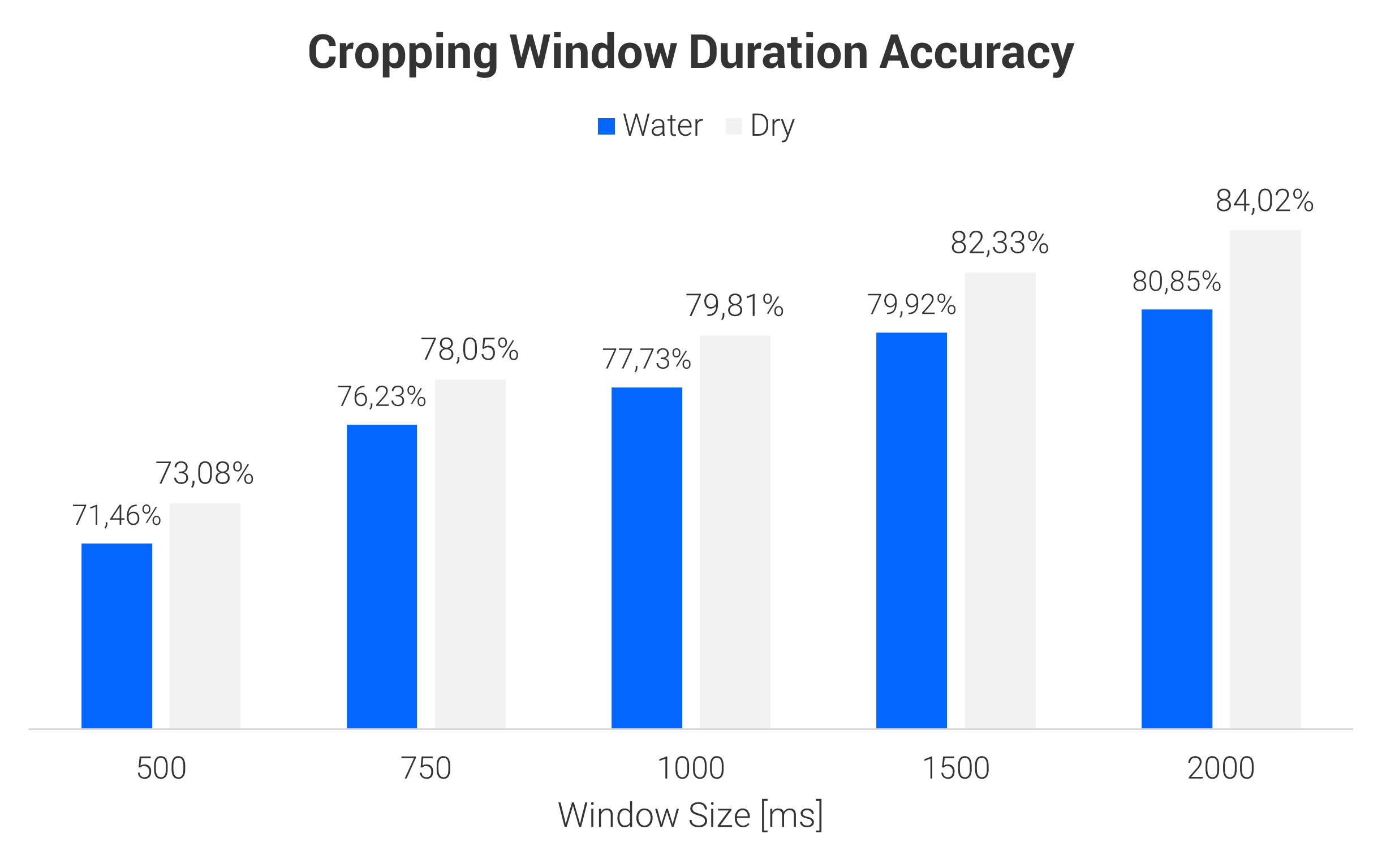

The effect of the cropping window duration was investigated. We conclude that longer signal windows yield better performance.

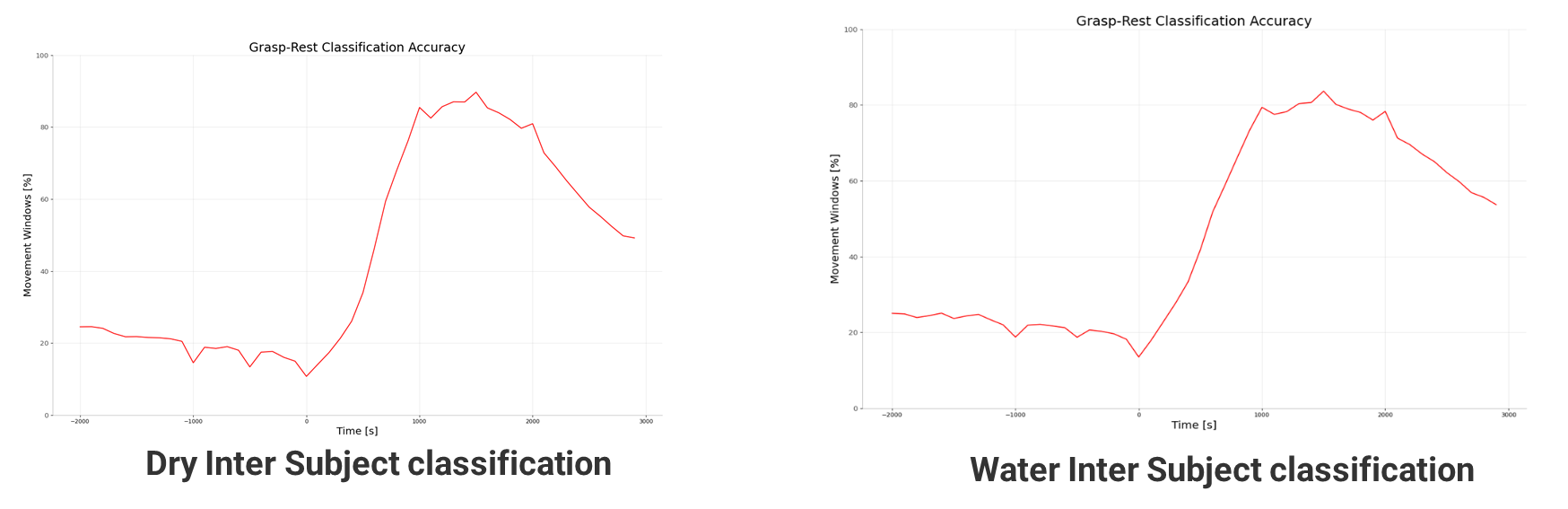

The best results were achieved when training the model with cropped windows of T = [0, 1] s with overlapping strides of 250 ms. The models were pretrained on another recording technology, resampled to 128 Hz, with the split-frequencies data augmentation strategy. On average, the best classification performance was reached 1 s after movement onset.

Despite the reduced number of channels in the dry-electrode recordings, the average performance did not decrease significantly, as can be seen in the figure above.
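The cropped training mentioned above can be sketched as a sliding window over each trial. This assumes that "overlapping strides of 250 ms" means 1 s crops whose start moves forward in 250 ms steps. The function names are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
def crop_starts(n_samples, fs=128, crop_s=1.0, stride_s=0.25):
    """Start indices of overlapping crops that fit entirely inside a trial."""
    crop_len = int(round(crop_s * fs))
    stride = int(round(stride_s * fs))
    return list(range(0, n_samples - crop_len + 1, stride))


def crop_trial(trial, fs=128, crop_s=1.0, stride_s=0.25):
    """Split one trial (a list of per-channel sample lists) into crops."""
    n_samples = len(trial[0])
    crop_len = int(round(crop_s * fs))
    return [
        [channel[start:start + crop_len] for channel in trial]
        for start in crop_starts(n_samples, fs, crop_s, stride_s)
    ]


# Example: a 5 s, 2-channel trial at 128 Hz (640 samples per channel).
trial = [[0.0] * 640, [0.0] * 640]
crops = crop_trial(trial)
```

Under these assumptions a 5 s trial yields 17 crops of 128 samples each, so cropping also multiplies the number of training examples per trial.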

5-Conclusion

This study confirmed that EEG-based correlates of reach-and-grasp actions can be successfully identified using deep learning based decoders. We demonstrated that a simple yet effective 1D convolutional CNN can match state-of-the-art neural decoders and improve the results when applying the models to new participants, even when a different recording modality is used. Unfortunately, a direct comparison with other reach-and-grasp studies is difficult due to significant differences in experimental setup and paradigm, and hence cannot be made rigorously.

References

[1] Schwarz, A., Ofner, P., Pereira, J., Sburlea, A. I., & Müller-Putz, G. R. (2018). Decoding natural reach-and-grasp actions from human EEG. Journal of Neural Engineering, 15(1), 016005. doi: 10.1088/1741-2552/aa8911. PMID: 28853420.

[2] Schwarz, A., Escolano, C., Montesano, L., & Müller-Putz, G. (2020). Analyzing and Decoding Natural Reach-and-Grasp Actions Using Gel, Water and Dry EEG Systems. Frontiers in Neuroscience, 14.

[3] Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., & Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. Journal of Neural Engineering, 15, 056013.

[4] Peterson, S. M., Steine-Hanson, Z., Davis, N., Rao, R. P. N., & Brunton, B. W. (2021). Generalized neural decoders for transfer learning across participants and recording modalities. Journal of Neural Engineering. https://doi.org/10.1088/1741-2552/abda0b